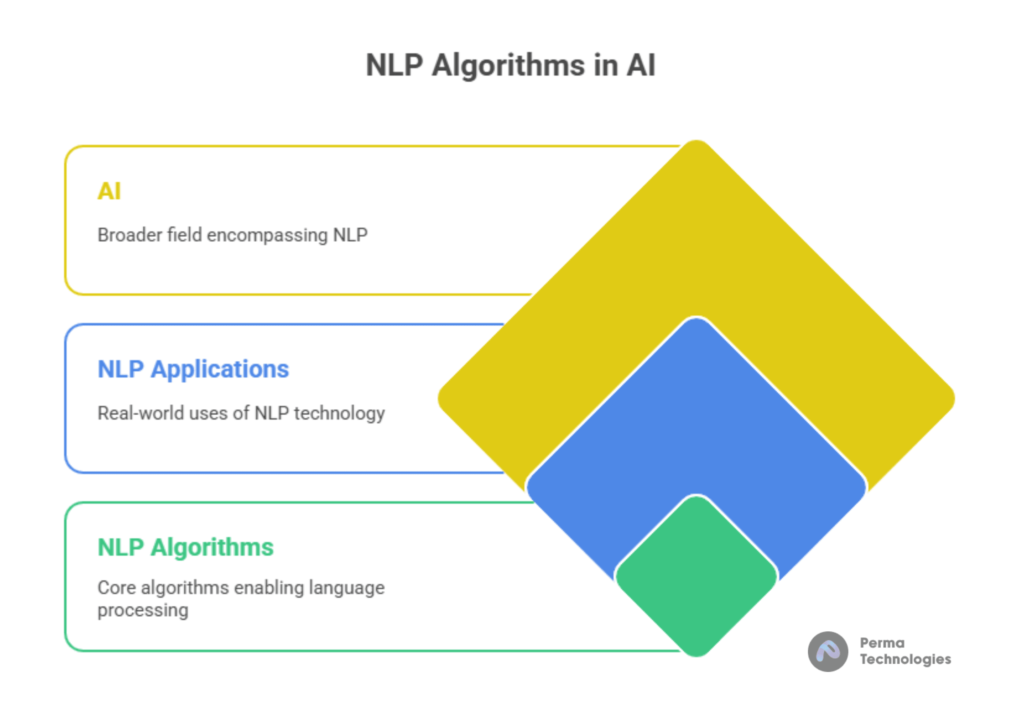

Natural Language Processing (NLP) is a cornerstone of Artificial Intelligence (AI), enabling machines to understand, interpret and respond to human language. Natural Language Processing algorithms are revolutionizing industries; for instance, they are widely applied in chatbots, voice assistants, sentiment analysis, machine translation, and legal document review.

In this blog, we’ll first look at the key AI algorithms driving modern NLP, then explore how they work, and finally evaluate their real-world impact using data and use cases from 2024–2025.

1. Tokenization and Text Preprocessing Algorithms

Before any sophisticated language understanding can occur, raw text must be prepared. This involves several preprocessing algorithms:

Sentence and Word Tokenization

- Algorithm: Rule-based and statistical tokenizers

- Purpose: Breaking down text into sentences and words

- Libraries: NLTK, spaCy, Hugging Face Tokenizers
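To make the rule-based approach concrete, here is a minimal sketch using only Python's standard library. The abbreviation list and both function names are hypothetical and chosen for illustration; libraries such as NLTK or spaCy handle far more edge cases.

```python
import re

# Hypothetical, deliberately tiny abbreviation list; real tokenizers ship much larger ones.
ABBREVIATIONS = {"dr.", "mr.", "mrs.", "ms.", "e.g.", "i.e.", "etc."}

def split_sentences(text):
    """Close a sentence at a token ending in . ! or ?, unless it is a known abbreviation."""
    sentences, current = [], []
    for token in text.split():
        current.append(token)
        if token[-1] in ".!?" and token.lower() not in ABBREVIATIONS:
            sentences.append(" ".join(current))
            current = []
    if current:  # trailing text without closing punctuation
        sentences.append(" ".join(current))
    return sentences

def tokenize_words(sentence):
    """Return words (keeping internal apostrophes) and single punctuation marks."""
    return re.findall(r"\w+(?:'\w+)*|[^\w\s]", sentence)

text = "Dr. Smith isn't here. Please call back tomorrow!"
for sentence in split_sentences(text):
    print(tokenize_words(sentence))
```

A rule like this breaks quickly on unseen abbreviations, which is one reason statistical tokenizers exist.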

Lemmatization and Stemming

- Algorithm: Porter Stemmer, WordNet Lemmatizer

- Purpose: Reducing words to their root forms (e.g., “running” → “run”)
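The difference between the two is easier to see in code. The sketch below is a toy suffix stripper and a lookup-based lemmatizer; it is not the Porter algorithm or the WordNet lemmatizer, and the suffix list and lemma table are assumptions made up for this example.

```python
SUFFIXES = ("ing", "ed", "es", "s")  # checked in this order

def naive_stem(word):
    """Strip one common suffix; the result is not guaranteed to be a real word."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            stem = word[: -len(suffix)]
            # Undo consonant doubling, e.g. "runn" -> "run"
            if len(stem) >= 2 and stem[-1] == stem[-2] and stem[-1] not in "aeiouls":
                stem = stem[:-1]
            return stem
    return word

# Hypothetical lookup table standing in for a real dictionary such as WordNet.
LEMMA_TABLE = {"ran": "run", "better": "good", "mice": "mouse"}

def naive_lemmatize(word):
    """Prefer a dictionary form when one is known, otherwise fall back to stemming."""
    word = word.lower()
    return LEMMA_TABLE.get(word, naive_stem(word))

for w in ["running", "jumped", "studies", "ran"]:
    print(w, naive_stem(w), naive_lemmatize(w))
```

Note that the stemmer turns "studies" into "studi" and leaves "ran" untouched, while the lemmatizer maps "ran" to "run" because it consults a dictionary.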

Named Entity Recognition (NER)

- Algorithm: Conditional Random Fields (CRF), BERT based models

- Use: Identifying names, organizations, dates, etc.

2024 Trend: Most modern NLP stacks now pair Transformer models with subword tokenizers such as SentencePiece or WordPiece. Because these split rare words into reusable subword units, they can reduce vocabulary size (by up to 40%, by some estimates) without compromising semantic accuracy.
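Subword tokenization can be sketched as a greedy longest-match lookup, which is the general idea behind WordPiece at inference time. The vocabulary below is a made-up toy; real vocabularies are learned from a corpus and contain tens of thousands of entries.

```python
def wordpiece_tokenize(word, vocab, unk="[UNK]"):
    """Greedily take the longest vocabulary entry at each position.

    Pieces that continue a word carry the "##" prefix.
    """
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        match = None
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:  # no piece fits: the whole word is unknown
            return [unk]
        pieces.append(match)
        start = end
    return pieces

# Hypothetical toy vocabulary for illustration only.
VOCAB = {"token", "##ization", "##ize", "##s", "un", "##break", "##able"}

print(wordpiece_tokenize("tokenization", VOCAB))
print(wordpiece_tokenize("unbreakable", VOCAB))
```

Seven vocabulary entries already cover several distinct words, which is where the vocabulary savings come from.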

2. Statistical Language Models

Before the deep learning revolution, statistical methods dominated Natural Language Processing. While now largely surpassed, these still serve educational and foundational purposes.

N-gram Models

- Algorithm: Predicts the next word based on the previous (n-1) words

- Strength: Simple, interpretable

- Limitation: Fails to capture long-range dependencies, since it only sees the previous n-1 words
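For n = 2 (a bigram model), the idea fits in a few lines. This is a minimal sketch with a three-sentence corpus invented for the example and no smoothing, so unseen words simply get no prediction.

```python
from collections import Counter, defaultdict

def train_bigram(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Maximum-likelihood estimate of P(next word | previous word)."""
    total = sum(counts[prev].values())
    return {w: c / total for w, c in counts[prev].items()} if total else {}

corpus = ["the cat sat", "the cat ran", "the dog sat"]
model = train_bigram(corpus)
probs = next_word_probs(model, "the")
print(probs)
```

After "the", the model assigns two thirds of the probability to "cat" and one third to "dog", purely from counts.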

Hidden Markov Models (HMM)

- Used for: POS tagging, speech recognition

- Technique: Probabilistic modeling of sequences, in which hidden states (e.g., POS tags) generate the observed words
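Decoding the most likely tag sequence from an HMM is usually done with the Viterbi algorithm. Here is a small sketch for POS tagging; the two tags and all probabilities are invented for illustration rather than estimated from data.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most probable hidden-state sequence for the observations."""
    # best[t][s] = (probability of the best path ending in s at step t, previous state)
    best = [{s: (start_p[s] * emit_p[s].get(observations[0], 0.0), None) for s in states}]
    for obs in observations[1:]:
        column = {}
        for s in states:
            column[s] = max(
                (best[-1][p][0] * trans_p[p][s] * emit_p[s].get(obs, 0.0), p)
                for p in states
            )
        best.append(column)
    # Backtrack from the most probable final state.
    path = [max(states, key=lambda s: best[-1][s][0])]
    for column in reversed(best[1:]):
        path.append(column[path[-1]][1])
    return list(reversed(path))

# Hypothetical toy parameters.
STATES = ["NOUN", "VERB"]
START_P = {"NOUN": 0.7, "VERB": 0.3}
TRANS_P = {"NOUN": {"NOUN": 0.3, "VERB": 0.7}, "VERB": {"NOUN": 0.6, "VERB": 0.4}}
EMIT_P = {
    "NOUN": {"dogs": 0.5, "bark": 0.1, "cats": 0.4},
    "VERB": {"dogs": 0.05, "bark": 0.9, "cats": 0.05},
}

tags = viterbi(["dogs", "bark"], STATES, START_P, TRANS_P, EMIT_P)
print(tags)
```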

Decline in Use: According to the 2024 Stack Overflow Developer Survey, only 6% of Natural Language Processing practitioners use HMMs or n-grams for production-level NLP tasks.

3. Machine Learning Algorithms for NLP Tasks

Before the advent of deep learning, traditional Machine Learning played a major role in Natural Language Processing.

Naive Bayes

- Used for: Spam detection, document classification

- Advantage: Fast to train, and works well with small datasets
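A multinomial Naive Bayes classifier is small enough to write from scratch. The sketch below uses add-one (Laplace) smoothing and a four-message training set invented for this example; the function names are ours, not a library API.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(examples):
    """examples: list of (text, label). Returns class counts, word counts, vocabulary."""
    class_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in examples:
        class_counts[label] += 1
        for word in text.lower().split():
            word_counts[label][word] += 1
            vocab.add(word)
    return class_counts, word_counts, vocab

def predict(text, class_counts, word_counts, vocab):
    """Pick the label with the highest log prior plus summed log likelihoods."""
    total_docs = sum(class_counts.values())
    scores = {}
    for label in class_counts:
        total_words = sum(word_counts[label].values())
        score = math.log(class_counts[label] / total_docs)
        for word in text.lower().split():
            if word in vocab:  # ignore words never seen in training
                # Add-one smoothing avoids zero probabilities.
                score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

TRAIN = [
    ("win cash prize now", "spam"),
    ("cheap prize win win", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday", "ham"),
]
nb_model = train_naive_bayes(TRAIN)
print(predict("win a prize", *nb_model))
print(predict("monday meeting", *nb_model))
```

Training is a single counting pass over the data, which is why the method stays fast and usable even with very few examples.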

Support Vector Machines (SVM)

- Use Case: Text classification (sentiment analysis, news categorization)

- Strength: Performs well with high-dimensional sparse data (e.g., TF-IDF features)
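To show what those sparse TF-IDF features look like, here is a basic sketch that stores each document as a dictionary of non-zero weights. It uses the plain formula tf × log(N / df); libraries often apply smoothed variants, so exact numbers will differ from theirs.

```python
import math
from collections import Counter

def tfidf_vectors(documents):
    """Return one sparse {word: weight} dict per document."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each word appear?
    doc_freq = Counter(word for tokens in tokenized for word in set(tokens))
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        vectors.append({
            word: (count / len(tokens)) * math.log(n_docs / doc_freq[word])
            for word, count in counts.items()
        })
    return vectors

docs = ["the movie was great", "the movie was terrible", "the weather is great"]
vectors = tfidf_vectors(docs)
print(vectors[1])
```

Words that appear in every document (here "the") get a weight of zero, while rare, informative words such as "terrible" get the highest weights. A classifier like an SVM then learns a separating boundary over these vectors.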

Decision Trees and Random Forests

- Use: Topic modeling (identifying the main themes in a text) and keyword extraction (pinpointing the terms most relevant to those themes)

- Insight: Offers explainability in classification decisions

Shift to Deep Learning: In the last 3 years, deep learning adoption for Natural Language Processing has grown from 55% to 83% (Source: NVIDIA AI Trends Report, 2024).

4. Deep Learning Algorithms for NLP

Deep learning is currently regarded as the gold standard of NLP and powers a wide range of applications, from ChatGPT to Google Translate.

Recurrent Neural Networks (RNNs) and LSTMs

- Use: Sequence modeling (e.g., language generation, translation)

- Strength: Handles temporal data

- Limitation: Plain RNNs struggle with long-range dependencies; LSTMs mitigate this but do not eliminate it

GRUs (Gated Recurrent Units)

- More efficient than LSTMs, with similar performance

- Well suited to lightweight NLP applications, such as mobile translation

Convolutional Neural Networks (CNNs) for Text

- Effective for sentence classification and text similarity tasks

- Fast to train but less effective with long sequences

2024 Insight: RNNs now power less than 10% of production NLP models, largely replaced by Transformers (Source: Hugging Face Developer Index).

5. Transformers: The Game Changer in NLP

The introduction of Transformers by Vaswani et al. in 2017 transformed Natural Language Processing forever.

Transformer Architecture

- Core Feature: Attention mechanism (specifically, self-attention)

- Benefits:

  - Captures long-range dependencies

  - Parallelizable (unlike RNNs)

  - State-of-the-art accuracy
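Self-attention itself is a short computation: every token scores every other token, the scores are normalized with a softmax, and each output is a weighted mix of all token vectors. The sketch below is a simplification that assumes queries, keys and values are the raw token vectors (Q = K = V), whereas a real Transformer applies learned projections and multiple heads.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention with Q = K = V = x (no learned weights)."""
    d = len(x[0])
    outputs, weights = [], []
    for q in x:
        # Similarity of this token to every token, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of all token vectors.
        outputs.append([sum(wj * v[i] for wj, v in zip(w, x)) for i in range(d)])
    return outputs, weights

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each token's row is computed independently of the others, which is what makes the operation parallelizable, and any token can attend to any other regardless of distance.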

Popular Transformer Models

| Model | Year | Params | Primary Use |
| --- | --- | --- | --- |
| BERT (base) | 2018 | 110M | Bidirectional text understanding |
| GPT-3 | 2020 | 175B | Text generation, chatbots |
| T5 | 2020 | Up to 11B | Text-to-text tasks |
| PaLM 2 | 2023 | Not officially disclosed | Multilingual & reasoning |
| GPT-4 | 2023 | Not disclosed (~1T is an unofficial estimate) | General-purpose multimodal AI |
| Gemini 1.5 | 2024 | Not officially disclosed | Unified vision-language reasoning |

NLP Model Growth: Since 2020, the size of top-performing Natural Language Processing models has grown roughly 10x every 18 months, while efficiency (tokens per dollar) has increased by ~8x (Source: OpenAI & Google DeepMind Whitepapers, 2025).

6. Pretrained Language Models (PLMs)

Pretraining + fine-tuning is now the dominant paradigm.

BERT (Bidirectional Encoder Representations from Transformers)

- Uses masked language modeling to understand context

- Best for: classification, NER, Q&A tasks
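Masked language modeling is simple to illustrate: hide some tokens and ask the model to recover them from context on both sides. The sketch below is a simplification that always substitutes `[MASK]`; BERT's actual recipe selects about 15% of tokens and replaces most with `[MASK]`, some with random tokens, and leaves some unchanged. The function name and `seed` parameter are our own.

```python
import random

def mask_tokens(tokens, mask_prob=0.15, mask_token="[MASK]", seed=0):
    """Return (masked input, labels); labels are None where nothing was masked."""
    rng = random.Random(seed)  # seeded so the example is reproducible
    masked, labels = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            masked.append(mask_token)
            labels.append(token)  # the model is trained to predict this token
        else:
            masked.append(token)
            labels.append(None)   # position is not scored
    return masked, labels

sentence = ["the", "cat", "sat", "on", "the", "mat"]
masked, labels = mask_tokens(sentence, mask_prob=0.5, seed=1)
print(masked)
print(labels)
```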

GPT (Generative Pre-trained Transformer)

- Unidirectional (left-to-right) model

- Best for: text generation, dialogue, summarization

RoBERTa, XLNet, ALBERT

- BERT variants and successors optimized for speed, memory, or permutation-based learning

Fine-tuning Impact: A fine-tuned, domain-specific BERT model can outperform general models by 20–30% on specific benchmarks (e.g., LegalBERT for legal text, BioBERT for biomedical Natural Language Processing).

7. Reinforcement Learning from Human Feedback (RLHF)

Why it Matters

- Models like ChatGPT use RLHF to align outputs with human values.

- RLHF refines generative responses beyond pure likelihood prediction.
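One common ingredient of RLHF is a reward model trained on pairs of responses that humans have ranked. A widely used objective is the pairwise loss -log(sigmoid(r_chosen - r_rejected)). The sketch below shows only that loss, with made-up reward values; it is an illustration of the general technique, not a description of any vendor's actual pipeline.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss: -log(sigmoid(r_chosen - r_rejected)).

    Small when the human-preferred response gets the higher reward.
    """
    diff = reward_chosen - reward_rejected
    # -log(sigmoid(d)) is the same as log(1 + exp(-d)).
    return math.log1p(math.exp(-diff))

print(preference_loss(2.0, 0.0))  # reward model agrees with the human ranking
print(preference_loss(0.0, 2.0))  # reward model disagrees, so the loss is larger
```

The trained reward model then scores new generations, and a reinforcement learning step nudges the language model toward higher-scoring outputs.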

Case Study: OpenAI’s RLHF training pipeline reduced toxic output in ChatGPT by 88%, according to an internal audit in late 2023.

8. Prompt Engineering and Few-Shot Learning

In 2023–2024, prompt design became as important as architecture.

Few-shot and zero-shot learning

- Models perform new tasks given only a handful of examples in the prompt (few-shot) or none at all (zero-shot), with no task-specific training

- GPT-4 and Gemini models can follow natural-language instructions without fine-tuning
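In practice, few-shot learning comes down to how the prompt is assembled. Here is a small sketch of a prompt builder for sentiment classification; the "Review:" and "Sentiment:" labels are one possible layout we chose for illustration, and passing an empty example list yields a zero-shot prompt.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble an instruction, labeled examples, and the unlabeled query."""
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Review: {text}", f"Sentiment: {label}", ""]
    # The prompt ends where the model is expected to continue.
    lines += [f"Review: {query}", "Sentiment:"]
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("Loved every minute.", "positive"), ("A waste of time.", "negative")],
    "The plot dragged but the acting was superb.",
)
print(prompt)
```

The resulting string would be sent to whichever model API you use; no model weights change.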

Chain-of-Thought (CoT) Prompting

- Enhances reasoning by encouraging models to “think aloud”
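A CoT prompt can be as simple as appending a reasoning cue, optionally preceded by a worked example. The helper below is a hypothetical sketch; the cue "Let's think step by step." is one commonly used phrasing, and the arithmetic example is ours.

```python
def build_cot_prompt(question, worked_example=None):
    """Build a prompt that invites step-by-step reasoning before the answer."""
    parts = []
    if worked_example is not None:
        q, reasoning, answer = worked_example
        parts.append(f"Q: {q}\nA: {reasoning} The answer is {answer}.")
    parts.append(f"Q: {question}\nA: Let's think step by step.")
    return "\n\n".join(parts)

cot_prompt = build_cot_prompt(
    "A shelf holds 4 boxes of 6 books. How many books are there?",
    worked_example=(
        "Tom has 5 apples and buys 2 bags of 3 apples. How many apples does he have?",
        "He starts with 5. Two bags of 3 is 6. 5 + 6 = 11.",
        "11",
    ),
)
print(cot_prompt)
```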

Effectiveness: CoT prompting improves reasoning accuracy in math and logic tasks by 30–40% in large models like PaLM and Gemini (Google Research, 2024).

9. Multimodal NLP and Vision Language Models

The next evolution in Natural Language Processing involves multimodal understanding: combining text, image, audio, and video.

Models: Flamingo, Gemini, GPT-4-Vision

- These fuse information across modalities, for example through cross-attention (as in Flamingo)

- Enable use cases like:

  - Visual question answering

  - Image captioning

  - Extracting text from scanned documents

2025 Use Case: Gemini 1.5 can summarize a scientific paper and explain its figures in natural language with 92% accuracy, according to Google DeepMind’s latest benchmark (Vision Language Reasoning Tasks 2025).

10. Ethical and Responsible NLP Algorithms

Bias Detection Algorithms

- Use adversarial testing to detect potential bias in sentiment analysis, and apply the same tests to hiring and judicial texts to check for fairness and accuracy
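One simple form of such testing is a counterfactual swap: change only a demographic term in a sentence and check whether the model's score moves. The sketch below uses a toy lexicon scorer with a deliberately planted bias so the effect is visible; `toy_score` and its lexicon are hypothetical stand-ins for a real sentiment model.

```python
def counterfactual_gap(score_fn, template, group_terms):
    """Score the same sentence with each term swapped in; return the largest gap."""
    scores = {term: score_fn(template.format(term=term)) for term in group_terms}
    return max(scores.values()) - min(scores.values()), scores

# Hypothetical toy scorer. The weight on "he" is a planted bias for demonstration.
TOY_LEXICON = {"brilliant": 1.0, "he": 0.2}

def toy_score(text):
    return sum(TOY_LEXICON.get(word, 0.0) for word in text.lower().split())

gap, scores = counterfactual_gap(toy_score, "{term} is a brilliant engineer", ["He", "She"])
print(scores)
print(gap)
```

A gap near zero across many templates is what you would hope to see; a consistent gap like the one here flags the model for closer review.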

Differential Privacy & Federated NLP

- Protect user data while training large models on decentralized devices
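The core of federated training is that devices send model updates rather than raw data, and a server averages them. Below is a minimal sketch of that averaging step, weighted by how many examples each client trained on. The client values are invented, and differential privacy (clipping updates and adding calibrated noise) would be a separate step not shown here.

```python
def federated_average(client_updates):
    """client_updates: list of (weights, num_examples). Returns the weighted mean."""
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [sum(w[i] * n for w, n in client_updates) / total for i in range(dim)]

# Two hypothetical clients: (locally trained weights, number of local examples).
updates = [([1.0, 2.0], 10), ([3.0, 4.0], 30)]
global_weights = federated_average(updates)
print(global_weights)
```

The client with more data pulls the global model further toward its own weights, while the server never sees any client's text.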

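Regulatory Insight: Under the EU AI Act, which entered into force in August 2024 with obligations phasing in over the following years, high-risk systems (e.g., resume screening) face requirements such as risk management and data governance measures aimed at detecting and mitigating bias.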

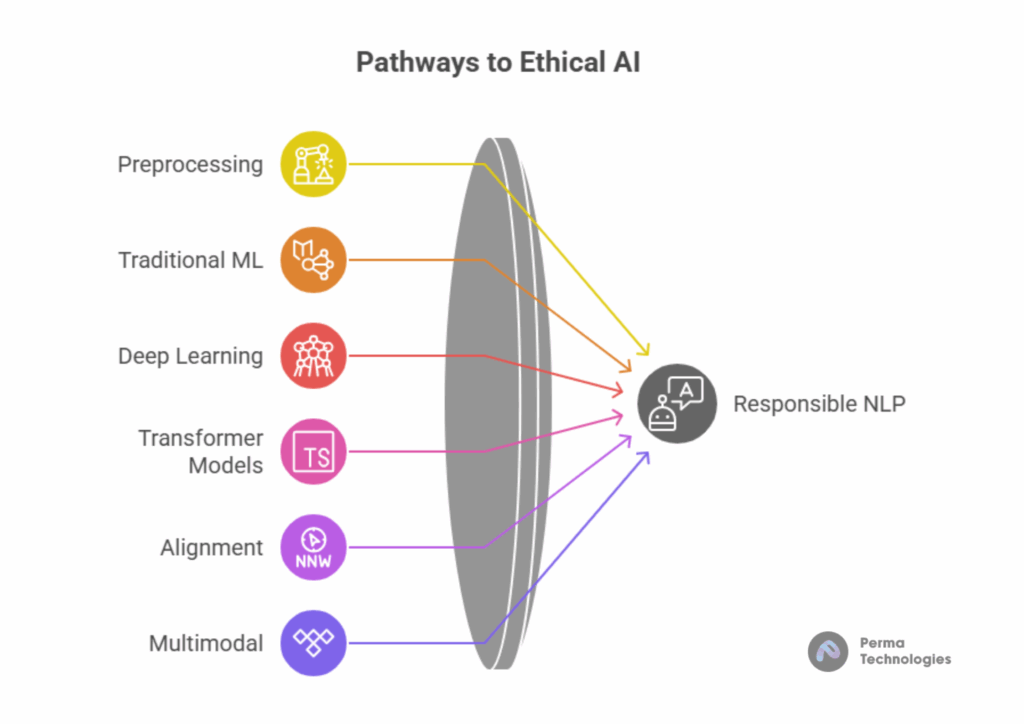

Summary Table of Key Algorithms

| Category | Algorithms | Primary Use |
| --- | --- | --- |
| Preprocessing | Tokenization, Lemmatization | Text cleaning |
| Traditional Machine Learning | Naive Bayes, SVM | Classification |
| Deep Learning | RNN, LSTM, CNN | Sequence modeling |
| Transformer Models | BERT, GPT, T5 | Understanding & generation |
| Alignment | RLHF | Human feedback tuning |
| Multimodal | Gemini, Flamingo | Vision + Language |
| Ethics | Bias detection, privacy-preserving AI | Responsible Natural Language Processing |

Looking ahead, we can anticipate more efficient, explainable, and cross-modal NLP systems, all built on the core AI algorithms covered above.

Bonus: Tools to Explore

- Hugging Face Transformers: Pretrained model hub

- OpenAI API: GPT-based language models

- AllenNLP: Modular NLP research platform

- spaCy: Industrial-strength NLP in Python

- LangChain: Build LLM-powered apps with chains and agents

Final Thoughts

From rule-based systems to trillion-parameter transformers, the evolution of Natural Language Processing algorithms is both rapid and remarkable. The key AI algorithms shaping Natural Language Processing today (Transformers, attention mechanisms, RLHF, and multimodal modeling) are enabling machines to comprehend, converse, and create like never before.